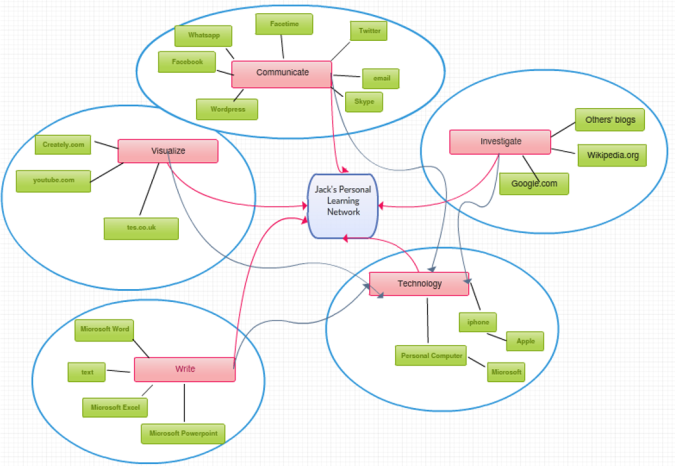

This brief post explores Laura Czerniewicz's thoughts on the future of teaching and learning, particularly the importance of contestation and equality. Czerniewicz is Associate Professor at the University of Cape Town and the director of its Centre for Innovation in Learning and Teaching. In her recent podcast setting out her concerns for the future of teaching and learning, she acknowledged that no aspect of them is neutral: all of it is political and ideological. When we teach something to someone, whatever the topic, we always approach it from our own points of view, and these colour how we present it. When we learn something, we always perceive it in light of our own views and pre-existing ideas.

I think this is what Czerniewicz means when she says that teaching and learning are never neutral. However, I believe that this tendency to approach what we teach or learn in light of our own viewpoints is also what enables us to be critical. We link what we present or hear to what we already know, trying to assimilate it into our existing world view, whether it supports that view or conflicts with it.

For Czerniewicz, the politics and ideology of teaching and learning are particularly pertinent because of the country she lives in. As she says in her podcast, in South Africa the wealth of just two people equals that of half the entire population. This immediately brings issues of access to, and affordability of, technology to the fore. If access to learning through the internet is unequal, wealthier people, who have more access, will have more opportunity than poorer people to be critical and to engage with ideas and information that conflict with their own viewpoints. This is why Czerniewicz's insistence on open access to knowledge is so important, and why she advocates for open education in her podcast. The more openly accessible knowledge is, and the less hierarchical internet access is, the easier it will be to achieve educational equality through the use of technology in teaching and learning.